Quick Guide to Data Serialization Methods

Aug 5, 2024, 7 AM

Pynxo

1. What is Data Serialization?

2. Common Data Serialization Formats

2.1 CSV

2.2 XML

2.3 JSON

2.4 Protocol Buffers

2.5 Parquet

3. Code Examples

4. Conclusion

In the era of big data, efficient data storage and transfer have become critical. One fundamental aspect of data management is serialization, a process that translates data structures into a format that can be easily stored and transmitted. This article explores the most widely used data serialization formats, explains their features and trade-offs, and shows what the same small dataset looks like in each of them.

1. What is Data Serialization?

Data serialization is the process of converting structured data into a format that can be easily stored or transmitted and then reconstructed later. This process is essential for various operations, including data storage, inter-process communication, and network data exchange.
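As a minimal sketch of that round trip, the snippet below uses Python's standard `json` module. The `record` dictionary is a hypothetical example value, and JSON is only one of several formats that could fill this role.

```python
import json

# Hypothetical in-memory record, used only for illustration.
record = {"name": "John", "age": 30, "city": "New York"}

# Serialize: Python dict -> JSON text -> UTF-8 bytes ready for a file or socket.
payload = json.dumps(record).encode("utf-8")

# Deserialize: bytes -> JSON text -> Python dict.
restored = json.loads(payload.decode("utf-8"))

print(payload)
print(restored == record)
```

The bytes in `payload` are what would actually be written to disk or sent over the network; `restored` is the reconstructed structure on the receiving side.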

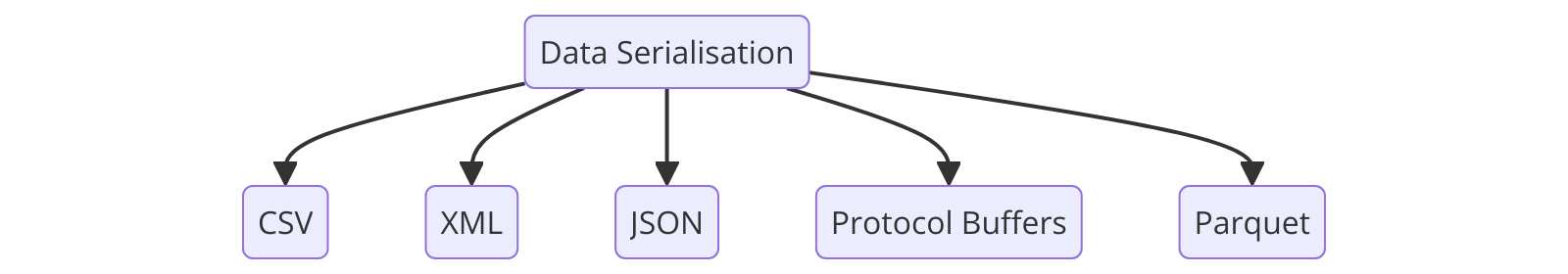

2. Common Data Serialization Formats

2.1 CSV (Comma-Separated Values)

CSV is one of the simplest and most common data serialization formats. It represents data in a tabular format, where each line corresponds to a row, and each value is separated by a comma.

Advantages:

- Human-readable and easy to edit with simple text editors.

- Supported by many applications and programming languages.

Disadvantages:

- Limited to simple data structures.

- No built-in type information; every value is read as text unless the consumer converts it.
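The type limitation can be seen with a short sketch using Python's standard `csv` module. The rows are hypothetical sample data, and the second record is chosen because its city contains a comma, which forces the writer to quote the field.

```python
import csv
import io

# Hypothetical rows; the ages are integers before serialization.
rows = [
    ["name", "age", "city"],
    ["John", 30, "New York"],
    ["Dana", 41, "Washington, D.C."],
]

# Serialize the rows to CSV text held in memory.
buffer = io.StringIO()
csv.writer(buffer).writerows(rows)
text = buffer.getvalue()

# Read the text back: every field comes back as a string.
parsed = list(csv.reader(io.StringIO(text)))

print(text)
print(parsed[1][1], type(parsed[1][1]))
```

After the round trip the age is the string `"30"`, not the integer `30`, so any numeric or date handling has to be done by the code that consumes the file.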

2.2 XML (eXtensible Markup Language)

XML is a flexible, structured markup language that encodes data in a readable format using tags.

Advantages:

- Highly readable and self-descriptive.

- Supports complex nested structures and data types.

Disadvantages:

- Verbose, leading to larger file sizes.

- Parsing can be slower compared to other formats.

2.3 JSON (JavaScript Object Notation)

JSON is a lightweight data interchange format that is easy for humans to read and write and easy for machines to parse and generate.

Advantages:

- Less verbose than XML.

- Native support in JavaScript and many other programming languages.

Disadvantages:

- No native types for dates or binary data; these are usually carried as strings, for example ISO 8601 text or Base64.
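A small sketch of that workaround, using only the Python standard library. The `joined` and `avatar` fields are hypothetical and are not part of the dataset used elsewhere in this article; ISO 8601 and Base64 are common conventions rather than something JSON itself mandates.

```python
import base64
import json
from datetime import date

# Hypothetical record containing a date and raw bytes.
record = {"name": "Anna", "joined": date(2024, 8, 5), "avatar": b"\x89PNG"}

# The standard encoder rejects values that have no JSON equivalent.
error = None
try:
    json.dumps(record)
except TypeError as exc:
    error = str(exc)

# Workaround: convert dates to ISO 8601 text and bytes to Base64 text.
encoded = json.dumps({
    "name": record["name"],
    "joined": record["joined"].isoformat(),
    "avatar": base64.b64encode(record["avatar"]).decode("ascii"),
})

# The reader must know the convention in order to reverse it.
decoded = json.loads(encoded)
restored_date = date.fromisoformat(decoded["joined"])
restored_bytes = base64.b64decode(decoded["avatar"])

print(error)
print(encoded)
```

Because the conversion is only a convention, both writer and reader need to agree on it; nothing in the JSON text marks `joined` as a date.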

2.4 Protocol Buffers (Protobuf)

Protobuf is a language-neutral, platform-neutral, extensible mechanism for serializing structured data, developed by Google.

Advantages:

- Efficient and compact binary format.

- Supports schema evolution.

Disadvantages:

- Requires defining message schemas in a .proto file.

- Less human-readable than text-based formats.
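Schema evolution works because fields on the wire are identified by their field numbers, and a reader can skip numbers it does not know. The sketch below is a hand-rolled decoder written for illustration, not the official Protobuf library. It assumes a message with string fields 1 and 3 and an integer field 2, handles only the varint and length-delimited wire types, and treats field 4 as a hypothetical field added by a newer writer.

```python
def read_varint(buf: bytes, pos: int) -> tuple[int, int]:
    """Read a base-128 varint starting at pos; return (value, next_pos)."""
    result = 0
    shift = 0
    while True:
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:
            return result, pos
        shift += 7


# Field numbers this (older) reader knows about.
KNOWN_FIELDS = {1: "name", 2: "age", 3: "city"}


def decode_person(buf: bytes) -> dict:
    person = {}
    pos = 0
    while pos < len(buf):
        tag, pos = read_varint(buf, pos)
        field_number, wire_type = tag >> 3, tag & 0x07
        if wire_type == 0:  # varint
            value, pos = read_varint(buf, pos)
        elif wire_type == 2:  # length-delimited (strings, bytes, nested messages)
            length, pos = read_varint(buf, pos)
            value = buf[pos:pos + length]
            pos += length
        else:
            raise ValueError(f"wire type {wire_type} is not handled in this sketch")
        if field_number in KNOWN_FIELDS:
            if isinstance(value, bytes):
                value = value.decode("utf-8")
            person[KNOWN_FIELDS[field_number]] = value
        # Unknown field numbers are skipped instead of causing an error.
    return person


# Bytes from a newer writer: fields 1-3 plus a hypothetical string field 4.
new_writer_bytes = (
    bytes.fromhex("0a044a6f686e101e1a084e657720596f726b") + b"\x22\x05j@x.y"
)
print(decode_person(new_writer_bytes))
```

The older reader still recovers the three fields it understands, which is the property that lets producers and consumers upgrade at different times.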

2.5 Parquet

Parquet is a columnar storage file format optimized for use with big data processing frameworks like Apache Hadoop and Apache Spark.

Advantages:

- Efficient storage and retrieval of large datasets.

- Optimized for read-heavy operations.

Disadvantages:

- Complex to implement compared to simpler formats like CSV.

- Primarily used within big data ecosystems.

3. Code Examples

CSV Representation

name, age, city

John, 30, New York

Anna, 22, London

Mike, 32, San Francisco

This CSV file represents a simple table with columns for name, age, and city. Each row contains the values for one record, separated by commas.
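One way to load this sample is sketched below with Python's standard `csv` module. Because the sample puts a space after each comma, the reader is created with `skipinitialspace=True`; without it the spaces would become part of the header names and values. The conversion of `age` to `int` is done by hand, since CSV carries no type information.

```python
import csv
import io

csv_text = """name, age, city
John, 30, New York
Anna, 22, London
Mike, 32, San Francisco
"""

# skipinitialspace drops the blank that follows each comma in the sample.
reader = csv.DictReader(io.StringIO(csv_text), skipinitialspace=True)

# Convert the age column explicitly; every field arrives as a string.
people = [
    {"name": row["name"], "age": int(row["age"]), "city": row["city"]}
    for row in reader
]

for person in people:
    print(person)
```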

XML Representation

<data>

<person>

<name>John</name>

<age>30</age>

<city>New York</city>

</person>

<person>

<name>Anna</name>

<age>22</age>

<city>London</city>

</person>

<person>

<name>Mike</name>

<age>32</age>

<city>San Francisco</city>

</person>

</data>

This XML file represents the same data as the CSV example but uses nested tags to provide structure and hierarchy. An XML Schema Definition (XSD) can additionally be used to validate that a document follows a specific structure.
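A short sketch of reading this document with Python's standard `xml.etree.ElementTree` module follows. The XML text is condensed onto fewer lines here, and the parser used does not perform schema validation, so the structure is simply assumed to match.

```python
import xml.etree.ElementTree as ET

xml_text = """<data>
  <person><name>John</name><age>30</age><city>New York</city></person>
  <person><name>Anna</name><age>22</age><city>London</city></person>
  <person><name>Mike</name><age>32</age><city>San Francisco</city></person>
</data>"""

root = ET.fromstring(xml_text)

# Element text is always a string, so the age is converted explicitly.
people = [
    {
        "name": person.findtext("name"),
        "age": int(person.findtext("age")),
        "city": person.findtext("city"),
    }
    for person in root.findall("person")
]

for person in people:
    print(person)
```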

JSON Representation

{

"title": "Persons",

"array": [

{"name": "John", "age": 30, "city": "New York"},

{"name": "Anna", "age": 22, "city": "London"},

{"name": "Mike", "age": 32, "city": "San Francisco"}

]

}

This JSON file represents the same three people as an object with a "title" field and an "array" field that holds the actual records.
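The sketch below parses such a document with Python's standard `json` module. It also checks a detail that is easy to get wrong when writing JSON by hand: a trailing comma before a closing bracket or brace is not valid JSON, and this parser rejects it.

```python
import json

json_text = """
{
  "title": "Persons",
  "array": [
    {"name": "John", "age": 30, "city": "New York"},
    {"name": "Anna", "age": 22, "city": "London"},
    {"name": "Mike", "age": 32, "city": "San Francisco"}
  ]
}
"""

doc = json.loads(json_text)

# Numbers keep their type: the ages come back as integers, not strings.
ages = [person["age"] for person in doc["array"]]
print(doc["title"], ages)

# A trailing comma makes the document invalid for this parser.
try:
    json.loads('{"array": [1, 2],}')
    trailing_comma_accepted = True
except json.JSONDecodeError:
    trailing_comma_accepted = False
print(trailing_comma_accepted)
```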

Protobuf Representation

Protobuf is a binary format, so the file content is not human-readable. The schema below defines the messages; it is followed by a note on the encoded bytes and a text rendering of the decoded data:

.proto Schema File:

syntax = "proto3";

message Person {

string name = 1;

int32 age = 2;

string city = 3;

}

message People {

repeated Person people = 1;

}

Encoded Data (Binary)

The binary file will contain the serialized data in a compact, non-readable form.
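To give a feel for those bytes, here is a hand-rolled sketch of the Protobuf wire encoding for a single `Person`. It is an illustration under stated assumptions, not the official library: it supports only non-negative integers and strings, and it always writes every field, whereas a real proto3 encoder omits fields that hold default values. The helper names are hypothetical.

```python
def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as a base-128 varint."""
    out = bytearray()
    while True:
        byte = value & 0x7F
        value >>= 7
        if value:
            out.append(byte | 0x80)  # more bytes follow
        else:
            out.append(byte)
            return bytes(out)


def encode_string_field(field_number: int, text: str) -> bytes:
    # Tag = (field number << 3) | wire type 2 (length-delimited).
    data = text.encode("utf-8")
    return encode_varint((field_number << 3) | 2) + encode_varint(len(data)) + data


def encode_int_field(field_number: int, value: int) -> bytes:
    # Tag = (field number << 3) | wire type 0 (varint).
    return encode_varint(field_number << 3) + encode_varint(value)


def encode_person(name: str, age: int, city: str) -> bytes:
    # Field numbers follow the .proto schema: name = 1, age = 2, city = 3.
    return (
        encode_string_field(1, name)
        + encode_int_field(2, age)
        + encode_string_field(3, city)
    )


encoded = encode_person("John", 30, "New York")
print(encoded.hex(" "))
print(len(encoded), "bytes")
```

Field names never appear in the output; only the field numbers and values are stored, which is a large part of why the format is compact and why the schema is needed to interpret it.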

Decoded Data (Text Representation for Understanding)

people {

name: "John"

age: 30

city: "New York"

}

people {

name: "Anna"

age: 22

city: "London"

}

people {

name: "Mike"

age: 32

city: "San Francisco"

}

Parquet Representation

Parquet is a binary columnar format, and thus the file content is not human-readable. However, here's how you can conceptually understand the storage structure:

Row Group 1:

Column "name": ["John", "Anna", "Mike"]

Column "age": [30, 22, 32]

Column "city": ["New York", "London", "San Francisco"]

The data is stored column by column, so a query that needs only one column can read just that column, and similar values stored next to each other compress well.
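The columnar idea can be sketched in plain Python by pivoting row records into one list per column. This only illustrates the layout; a real Parquet file adds encodings, compression, and metadata, and would normally be written with a dedicated library rather than by hand.

```python
# The same three records in row-oriented form.
rows = [
    {"name": "John", "age": 30, "city": "New York"},
    {"name": "Anna", "age": 22, "city": "London"},
    {"name": "Mike", "age": 32, "city": "San Francisco"},
]

# Pivot to a column-oriented layout: one list of values per column.
columns = {key: [row[key] for row in rows] for key in rows[0]}

# An aggregate over one column touches only that column's values.
average_age = sum(columns["age"]) / len(columns["age"])

print(columns)
print(average_age)
```

Computing the average reads only the `age` list and never touches names or cities, which is the access pattern that analytical queries over large datasets benefit from.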

4. Conclusion

Data serialization is a crucial aspect of modern data processing, enabling efficient storage, transfer, and manipulation of data. Each serialization format has its strengths and use cases, from the simplicity of CSV to the efficiency of Parquet for large-scale data processing. By understanding these formats and their file representations, you can choose the most suitable method for your specific data needs.